1.4. Adjust Counting#

Adjusted Counting methods improve upon simple “counting” quantifiers by correcting bias using what is known about the classifier’s errors on the training set. They aim to produce better estimates of class prevalence (how frequent each class is in a dataset) even when training and test distributions differ.

See Counters For Quantification for an overview of the base counters.

Currently, there are two types of adjustment methods implemented:

Threshold Adjustment Methods: These methods adjust the decision threshold of the classifier to optimize prevalence estimation. Examples include Threshold Adjusted Count (TAC) and its threshold selection policies (TX, TMAX, T50, MS, MS2).

Matrix Adjustment Methods: These methods use a confusion matrix derived from the classifier’s performance on a validation set to adjust the estimated prevalences. Examples include Adjusted Count (AC), Probabilistic Adjusted Count (PAC), and Friedman’s Method (FM).

1.4.1. Threshold Adjustment#

Threshold-based adjustment methods correct the bias of Classify and Count (CC) by using the classifier’s True Positive Rate (TPR) and False Positive Rate (FPR).

They are mainly used for binary quantification tasks.

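Threshold Adjusted Count (TAC) Equation

\[\hat{p}_{\mathrm{TAC}} = \frac{\hat{p}_{\mathrm{CC}} - \mathrm{FPR}}{\mathrm{TPR} - \mathrm{FPR}}\]

Corrected prevalence estimate using classifier error rates, where \(\hat{p}_{\mathrm{CC}}\) is the fraction of items that the classifier predicts as positive.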

The main idea is that by adjusting the observed rate of positive predictions, we can better approximate the real class distribution.
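The correction can be checked with a few lines of arithmetic. The sketch below is illustrative only and is not mlquantify's implementation: the function name `adjusted_count`, the clipping to \([0, 1]\), and the fallback when TPR equals FPR are assumptions made for this example.

```python
def adjusted_count(cc_rate, tpr, fpr):
    """Correct an observed positive-prediction rate using TPR and FPR.

    Hypothetical helper for illustration; not part of mlquantify.
    """
    denom = tpr - fpr
    if abs(denom) < 1e-12:
        # The classifier does not separate the classes, so no correction
        # is possible; return the raw rate unchanged (an assumption here).
        return cc_rate
    estimate = (cc_rate - fpr) / denom
    # Sampling noise can push the estimate outside [0, 1], so clip it.
    return min(1.0, max(0.0, estimate))


# With TPR = 0.8 and FPR = 0.1, a true prevalence of 3/7 yields an observed
# rate of (3/7) * 0.8 + (4/7) * 0.1 = 0.4, so correcting 0.4 recovers 3/7.
print(adjusted_count(0.40, tpr=0.80, fpr=0.10))
```

The observed rate of 0.40 underestimates the true prevalence of about 0.43 because the classifier misses more positives than it adds false positives.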

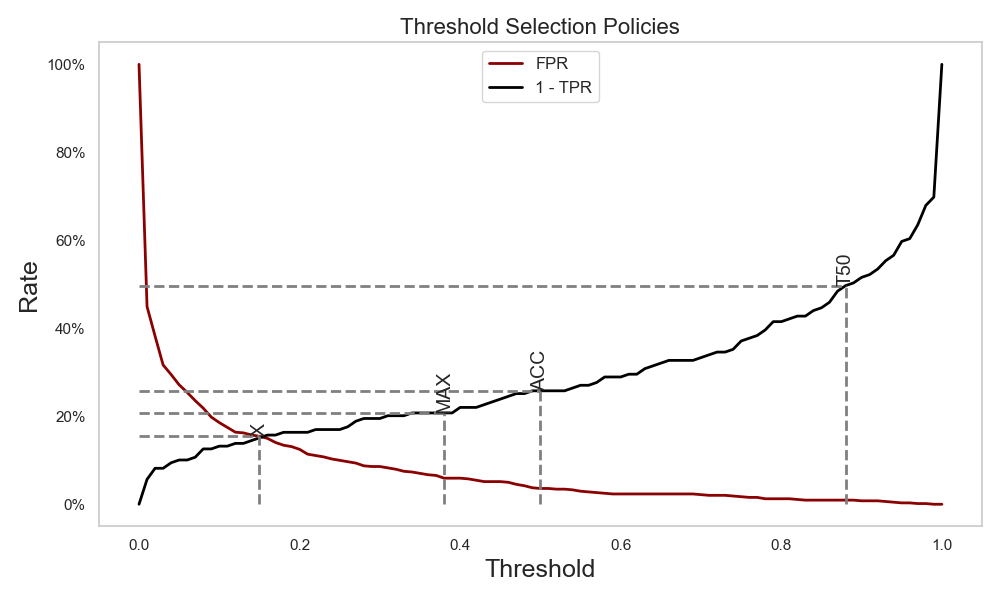

Comparison of different threshold selection policies showing FPR and 1-TPR curves with optimal thresholds for each method [Adapted from Forman (2008)]#

The threshold methods differ in how they choose the classifier cutoff \(\tau\) applied to the scores \(s(x)\).
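As a rough illustration of how three of these policies pick \(\tau\), the sketch below follows the definitions commonly attributed to Forman (2008): TX looks for the point where FPR equals 1-TPR, TMAX maximizes TPR-FPR, and T50 looks for TPR closest to 0.5. MS and MS2 aggregate estimates over many thresholds and are not shown. The helper names `rates_at` and `pick_threshold`, the toy data, and the tie-breaking rule (first match wins) are assumptions for this example, not mlquantify's API.

```python
def rates_at(threshold, scores, labels):
    """TPR and FPR when items with score >= threshold are predicted positive."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    tpr = sum(s >= threshold for s in pos) / len(pos)
    fpr = sum(s >= threshold for s in neg) / len(neg)
    return tpr, fpr


def pick_threshold(policy, thresholds, scores, labels):
    """Return (threshold, tpr, fpr) selected by a hypothetical policy name."""
    table = [(t, *rates_at(t, scores, labels)) for t in thresholds]
    if policy == "TX":      # FPR as close as possible to 1 - TPR
        key = lambda row: abs(row[2] - (1.0 - row[1]))
    elif policy == "TMAX":  # largest gap between TPR and FPR
        key = lambda row: -(row[1] - row[2])
    elif policy == "T50":   # TPR as close as possible to 0.5
        key = lambda row: abs(row[1] - 0.5)
    else:
        raise ValueError(f"unknown policy: {policy}")
    return min(table, key=key)  # ties resolve to the lowest threshold


# Toy validation scores: four positives followed by four negatives.
scores = [0.9, 0.8, 0.6, 0.4, 0.7, 0.3, 0.2, 0.1]
labels = [1, 1, 1, 1, 0, 0, 0, 0]
thresholds = [0.05, 0.15, 0.25, 0.35, 0.45, 0.55, 0.65, 0.75, 0.85, 0.95]

for policy in ("TX", "TMAX", "T50"):
    print(policy, pick_threshold(policy, thresholds, scores, labels))
```

The three policies can select different cutoffs on the same scores, which is why the resulting TPR and FPR, and therefore the corrected estimate, depend on the policy.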

All of these methods provide fit, predict and aggregate methods, like other aggregative quantifiers. They also provide a specialized method, get_best_thresholds, which identifies the optimal threshold given y and the predicted probabilities. Here is an example using the T50 method:

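```python
from mlquantify.adjust_counting import T50, evaluate_thresholds
from sklearn.linear_model import LogisticRegression

# X_train, y_train, X_val, y_val, X_test and y_test are assumed to exist already.
clf = LogisticRegression()
clf.fit(X_train, y_train)  # the classifier must be fitted before predict_proba

# TPR and FPR at each candidate threshold
thresholds, tprs, fprs = evaluate_thresholds(
    y=y_test,
    probabilities=clf.predict_proba(X_test)[:, 1],  # positive-class probabilities
)

q = T50()
best_thr, best_tpr, best_fpr = q.get_best_thresholds(X_val, y_val)
print(f"Best threshold: {best_thr}, TPR: {best_tpr}, FPR: {best_fpr}")
```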

Note

Threshold adjustment methods such as TAC are primarily designed for binary classification tasks. For multi-class problems, matrix adjustment methods are generally preferred.

1.4.2. Matrix Adjustment#

Matrix-based adjustment methods use a confusion matrix or generalized rate matrix to adjust predictions for multi-class quantification. They treat quantification as solving a small linear system.

Matrix Equation

\[\mathbf{y} = \mathbf{X}\,\hat{\pi}_F\]

General linear system linking observed and true prevalences

Here:

\(\mathbf{y}\): average observed predictions in \(U\)

\(\mathbf{X}\): classifier behavior from training (mean conditional rates)

\(\hat{\pi}_F\): corrected class prevalences in \(U\)
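A small numerical sketch may help make the linear system concrete. It assumes a particular orientation, with `X[j, i]` as the rate of predicting class `j` for items whose true class is `i`, and it replaces the constrained problem with a simplification: ordinary least squares followed by clipping and renormalization. The function name `solve_prevalences` and the numbers are hypothetical and not taken from mlquantify.

```python
import numpy as np


def solve_prevalences(X, y):
    """Least-squares solution of y = X @ pi, mapped back to valid prevalences.

    Simplified stand-in for a constrained solver: negative entries are
    clipped to zero and the result is rescaled to sum to one.
    """
    pi, *_ = np.linalg.lstsq(X, y, rcond=None)
    pi = np.clip(pi, 0.0, None)
    total = pi.sum()
    if total == 0.0:
        # Degenerate case: fall back to a uniform distribution.
        return np.full(len(pi), 1.0 / len(pi))
    return pi / total


# Assumed classifier behaviour: column i holds P(predicted class | true class i).
X = np.array([
    [0.8, 0.1, 0.1],
    [0.1, 0.7, 0.2],
    [0.1, 0.2, 0.7],
])
true_pi = np.array([0.5, 0.3, 0.2])

# What a perfect sample would show as average predictions in U.
y = X @ true_pi

print("observed:", y)
print("corrected:", solve_prevalences(X, y))
```

In this noise-free setting the corrected vector matches the true prevalences, while the observed vector does not; with finite samples the match is only approximate.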


1.4.2.1. Adjusted Count (AC) and Probabilistic Adjusted Count (PAC)#

```python
from mlquantify.adjust_counting import AC, PAC
from sklearn.linear_model import LogisticRegression

q = AC(learner=LogisticRegression())
q.fit(X_train, y_train)
q.predict(X_test)
# -> {0: 0.48, 1: 0.52}
```

Both AC and PAC solve the linear system above and differ only in how they build \(\mathbf{X}\) and \(\mathbf{y}\):

AC uses hard classifier decisions (confusion matrix).

PAC uses soft probabilities \(P(y=l|x)\).
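The difference between hard and soft outputs can be sketched with NumPy on simulated data. Everything here is an assumption for illustration: the toy data generator, the helper names `simulate`, `outputs` and `estimate`, and the use of a plain linear solve with clipping instead of a constrained solver. It does not reproduce mlquantify's internals.

```python
import numpy as np

rng = np.random.default_rng(0)


def simulate(n, prevalence):
    """Toy binary data: posteriors that are informative but noisy."""
    labels = (rng.random(n) < prevalence).astype(int)
    p1 = 0.5 + 0.25 * (2 * labels - 1) + 0.2 * rng.standard_normal(n)
    p1 = np.clip(p1, 0.01, 0.99)
    return np.column_stack([1.0 - p1, p1]), labels


def outputs(posteriors, soft):
    """PAC keeps the posteriors; AC replaces them with one-hot decisions."""
    if soft:
        return posteriors
    return np.eye(posteriors.shape[1])[posteriors.argmax(axis=1)]


def estimate(val_post, val_labels, test_post, soft):
    k = val_post.shape[1]
    out = outputs(val_post, soft)
    # Column i: mean output over validation items whose true class is i.
    X = np.column_stack([out[val_labels == i].mean(axis=0) for i in range(k)])
    # Mean output over the unlabeled test items.
    y = outputs(test_post, soft).mean(axis=0)
    pi = np.clip(np.linalg.solve(X, y), 0.0, None)
    return pi / pi.sum()


val_post, val_labels = simulate(20000, prevalence=0.5)
test_post, _ = simulate(20000, prevalence=0.8)  # shifted class distribution

cc_estimate = outputs(test_post, soft=False).mean(axis=0)
ac_estimate = estimate(val_post, val_labels, test_post, soft=False)
pac_estimate = estimate(val_post, val_labels, test_post, soft=True)

print("CC :", cc_estimate)
print("AC :", ac_estimate)
print("PAC:", pac_estimate)
```

On this toy data the uncorrected count underestimates the positive class, while both adjusted estimates land close to the simulated prevalence of 0.8.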

1.4.2.2. Friedman’s Method (FM)#

Friedman’s Method (FM) builds its adjustment matrix \(\mathbf{X}\) from a feature transformation \(f_l(x)\) that indicates whether the predicted probability of class \(l\) for an item exceeds that class’s proportion in the training data, \(\pi_l^T\). This threshold is chosen because it theoretically minimizes the variance of the resulting prevalence estimates.

Mathematical details - Friedman’s Method

To improve stability, FM builds the adjustment matrix \(\mathbf{X}\) from a transformation function applied to each class \(l\) and training sample \(x\):

\[f_l(x) = I\left[\hat{P}_T(y = l \mid x) > \pi_l^T\right]\]

where:

\(I[\cdot]\) is the indicator function, equal to 1 if the condition inside is true, 0 otherwise.

\(\hat{P}_T(y = l \mid x)\) is the classifier’s estimated posterior probability for class \(l\) on training sample \(x\).

\(\pi_l^T\) is the prevalence of class \(l\) in the training set.

The entry \(X_{i,l}\) of the matrix \(\mathbf{X}\) is computed as the average of \(f_l(x)\) over all \(x\) in class \(i\) of the training data:

\[X_{i,l} = \frac{1}{|L_i|} \sum_{x \in L_i} f_l(x)\]

where:

\(L_i\) is the subset of training samples with true class \(i\).

\(|L_i|\) is the number of these samples.

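This matrix is then used in the constrained least squares optimization:

\[\hat{\pi}_F = \arg\min_{\pi} \left\lVert \mathbf{y} - \mathbf{X}\pi \right\rVert_2^2 \quad \text{subject to} \quad \pi_l \ge 0, \ \sum_l \pi_l = 1\]

to estimate the corrected prevalences \(\hat{\pi}_F\) on the test set [3].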

This thresholding on posterior probabilities ensures that the matrix \(\mathbf{X}\) highlights regions where the classifier consistently predicts a class more confidently than its baseline prevalence, improving statistical stability and reducing estimation variance [3].
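The construction can be sketched end to end on simulated three-class data. This is a minimal illustration under stated assumptions, not mlquantify's implementation: the data generator and the names `simulate`, `fm_features` and `fm_estimate` are hypothetical, the matrix is stored transposed relative to \(X_{i,l}\) so that the system reads `y = X @ pi`, and the constrained optimization is approximated by least squares followed by clipping and renormalization.

```python
import numpy as np

rng = np.random.default_rng(0)


def simulate(n, prev):
    """Toy multi-class data: softmax posteriors from noisy logits."""
    k = len(prev)
    labels = rng.choice(k, size=n, p=prev)
    logits = 2.0 * np.eye(k)[labels] + rng.standard_normal((n, k))
    expd = np.exp(logits)
    return expd / expd.sum(axis=1, keepdims=True), labels


def fm_features(posteriors, train_prev):
    """f_l(x) = 1 when the posterior for class l exceeds its training prevalence."""
    return (posteriors > train_prev).astype(float)


def fm_estimate(train_post, train_labels, test_post):
    k = train_post.shape[1]
    train_prev = np.bincount(train_labels, minlength=k) / len(train_labels)
    f_train = fm_features(train_post, train_prev)
    # Column i holds the mean of f over training items with true class i
    # (the transpose of X_{i,l} in the text, so that y = X @ pi).
    X = np.column_stack([f_train[train_labels == i].mean(axis=0) for i in range(k)])
    # Mean of f over the unlabeled test items.
    y = fm_features(test_post, train_prev).mean(axis=0)
    # Simplification: unconstrained least squares, then clip and renormalize.
    pi, *_ = np.linalg.lstsq(X, y, rcond=None)
    pi = np.clip(pi, 0.0, None)
    return pi / pi.sum()


train_prev = np.array([1 / 3, 1 / 3, 1 / 3])
test_prev = np.array([0.6, 0.3, 0.1])

train_post, train_labels = simulate(20000, train_prev)
test_post, _ = simulate(20000, test_prev)

estimate = fm_estimate(train_post, train_labels, test_post)
print("estimated prevalences:", estimate)
```

A dedicated constrained solver would enforce non-negativity and the sum-to-one condition during the optimization rather than afterwards, which matters most when the unconstrained solution falls outside the simplex.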

References